Binding Big Data with Bounding Box Annotation

Bounding box annotation is one of the commonly used methods in image data annotation.

Data annotation is a key part of AI: machine learning models trained on quality labeled data make more accurate predictions. Of the many types of data annotation used in machine learning, image data annotation is one of the most common for training computer vision models.

Image data can be annotated in various ways depending on the use case, and bounding boxes are one of the most efficient methods.

What is bounding box annotation?

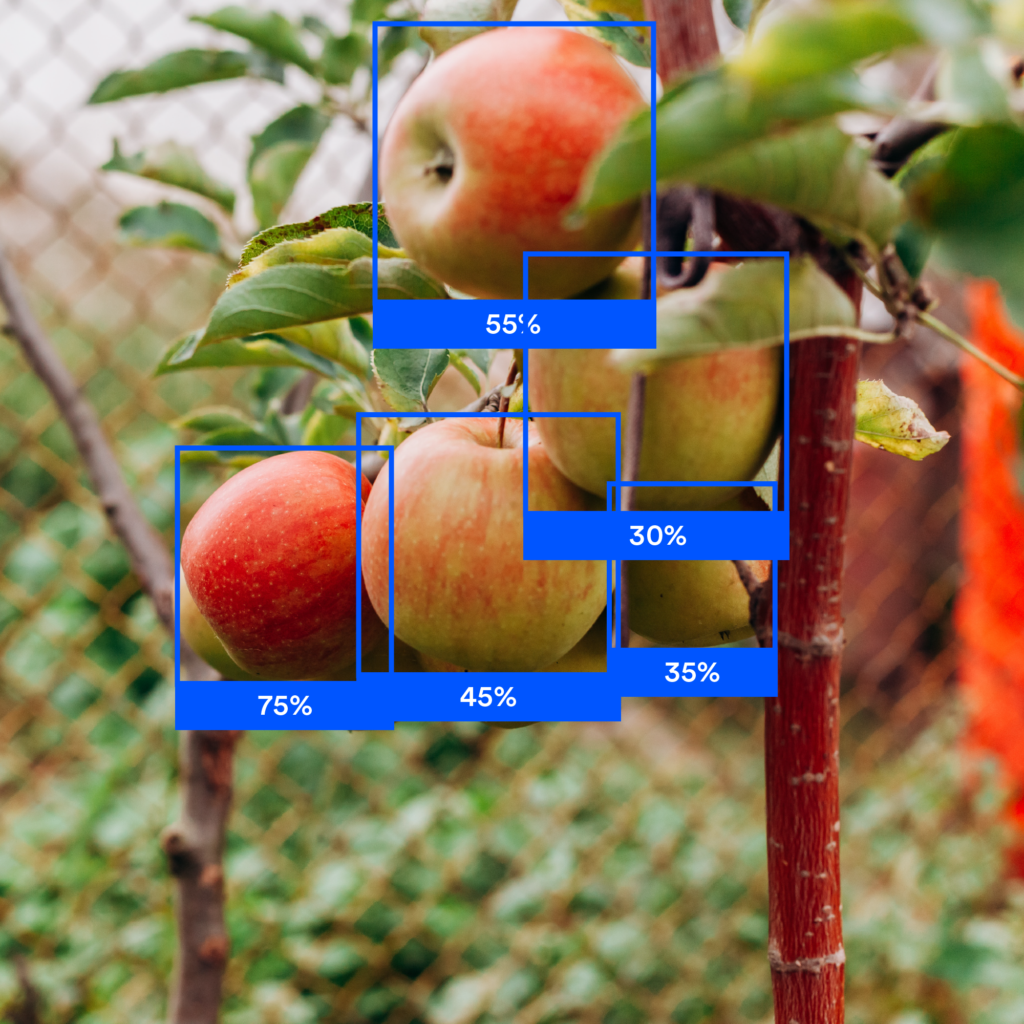

Bounding box annotation uses rectangular boxes to mark or define specific objects in image data. These labels make it easier for machine learning models to perform object detection and localization tasks efficiently and accurately.1

A bounding box describes the location and size of an object in an image, but it also has other applications. For example, bounding boxes are often used when building detectors for computer vision tasks such as image classification or object detection.
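As a rough illustration of how a box encodes location and size, here is a minimal Python sketch. The `BoundingBox` class, its field names, and the sample coordinates are assumptions made for this example, not part of any particular annotation tool. It assumes pixel coordinates with the origin at the top-left corner of the image.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Hypothetical axis-aligned box in pixel coordinates (origin at top-left)."""

    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self) -> None:
        # Reject boxes with zero or negative width or height.
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("box must have positive width and height")

    @property
    def width(self) -> float:
        return self.x_max - self.x_min

    @property
    def height(self) -> float:
        return self.y_max - self.y_min

    @property
    def area(self) -> float:
        return self.width * self.height

    @property
    def center(self) -> tuple[float, float]:
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)

    def to_xywh(self) -> tuple[float, float, float, float]:
        """Return (x_min, y_min, width, height), a layout some datasets use."""
        return (self.x_min, self.y_min, self.width, self.height)


box = BoundingBox("pedestrian", x_min=40, y_min=60, x_max=100, y_max=220)
print(box.width, box.height, box.area)  # 60 160 9600
print(box.center)                       # (70.0, 140.0)
print(box.to_xywh())                    # (40, 60, 60, 160)
```

Four numbers plus a label are enough to recover the position (corner or center) and the size (width, height, area) of the labeled object.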

For many companies, outsourcing image data annotation guarantees security, accuracy, and efficiency. Outsourcing data annotation projects allows clients to save time and achieve new heights in productivity, flexibility, and scalability.

Use cases of bounding box annotation

Bounding box annotation is a crucial step in image data annotation. It helps improve the accuracy of image recognition and makes object detection, classification, and segmentation more efficient.

- Autonomous vehicles: Bounding boxes help detect objects such as pedestrians, street signs, and traffic lights. These labels train self-driving models to recognize barriers and make the algorithm accurate enough to keep drivers safe.

- eCommerce and Retail: Bounding box annotation helps label products and categories such as fashion accessories and cosmetics, which makes search results more accurate.2

  - Anti-theft detection: For physical stores, bounding box annotation helps detect behavior patterns that may indicate bad actors attempting to steal goods and products.

- Robotics and Drone imagery: Bounding boxes can help detect objects from a distance, such as migratory species, damaged roofs, or large objects, to prevent collisions and accidents.

- Damage detection for insurance claims: Vehicle damage, such as broken window glass, a damaged roof, or broken tail lights, can be easily detected with bounding boxes to help prevent accidents and determine a cost estimate for insurance claims.

- Farming: Image data annotation is used in the farming sector to predict the state of crops, mainly their growth and cultivation time. This reduces manual labor significantly, giving farmers a more accurate reading without going out to the field.

  - Livestock: Animals behave differently around their handlers. Real-time image data annotation helps recognize patterns and behaviors without manual work, which could help protect livestock from predators and animal attacks.

Best practices for bounding box annotation

Bounding box annotation is a great way to annotate your images, but it can be tricky to get right. Here are some best practices you can follow:

- Ensuring pixel-perfect tightness: The edges of a bounding box should be as close to the object as possible for precise detection.

- Addressing box size variation: Variations in bounding box size should be consistent with the size and volume of the objects to ensure accurate predictions. Consistency also matters because different users may interpret the same annotation differently depending on their screen size or environment (e.g., when the image is displayed at full size or as a thumbnail).

- Reducing box overlap: A bounding box that overlaps the box of another object affects the accuracy of the model, so overlap should be avoided as much as possible.

- Avoiding diagonal items: A diagonal item fills only a small part of its bounding box, leaving most of the box as background. This can confuse the machine learning model, which may mistake the target object for the background.

- Eliminating bias: In image data annotation, it's crucial to avoid bias to ensure the quality and accuracy of the project outcome. Biased data causes erroneous and unfair results, rendering the project unusable at best and harmful at worst.
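Some of the practices above can be checked numerically. The following Python sketch is one possible approach, not a prescribed workflow: it uses intersection over union (IoU), a common overlap measure, to compare an annotated box against a tight reference box and to flag overlapping pairs, and it uses a fill ratio to show how little of its box a diagonal item covers. The function names, the `(x_min, y_min, x_max, y_max)` box layout, the sample values, and the thresholds are all assumptions made for illustration.

```python
def box_area(box):
    """Area of an (x_min, y_min, x_max, y_max) box."""
    return (box[2] - box[0]) * (box[3] - box[1])


def iou(a, b):
    """Intersection over union of two boxes: 0 = disjoint, 1 = identical."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def overlapping_pairs(boxes, threshold):
    """Index pairs whose IoU exceeds the threshold (an assumed review cutoff)."""
    return [
        (i, j)
        for i in range(len(boxes))
        for j in range(i + 1, len(boxes))
        if iou(boxes[i], boxes[j]) > threshold
    ]


def fill_ratio(object_pixels, box):
    """Fraction of the box covered by the object's own pixels."""
    area = box_area(box)
    return object_pixels / area if area > 0 else 0.0


# Tightness: a loose annotation compared with a tight reference box.
loose = (10, 10, 110, 110)
tight = (20, 20, 100, 100)
print(iou(loose, tight))  # 0.64

# Overlap: flag pairs of boxes that share a large region.
boxes = [(0, 0, 100, 100), (50, 0, 150, 100), (300, 300, 400, 400)]
print(overlapping_pairs(boxes, threshold=0.3))  # [(0, 1)]

# Diagonal items: a thin diagonal object covers little of its box.
print(round(fill_ratio(2000, (0, 0, 140, 140)), 2))  # 0.1
```

A low IoU against a reference box suggests a loose annotation, a high IoU between two different objects suggests overlap worth reviewing, and a low fill ratio suggests a box that is mostly background. Suitable cutoffs depend on the project.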

Outsourcing Bounding Box Annotation to TaskUs

TaskUs is proud to offer the best solution for outsourcing AI services such as computer vision, Natural Language Processing (NLP), and content relevance, on top of our tech-and-human-enabled image data annotation process.

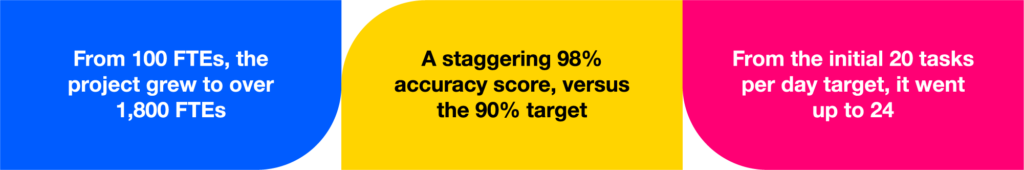

Our people-first philosophy, decades of experience, and technology led Us to provide Ridiculously Good image data annotation results to one of our clients, which ultimately paved the way to the world’s safest autonomous vehicles.

We believe in the magic of the human touch working alongside our cutting-edge technology and artificial intelligence. That’s why we launched TaskVerse, our very own freelancing platform that allows Us to gather more global talent and provide error-free, diverse, and unbiased data annotation projects.

References
